Hackers can silently access Siri and Google Now on your phone

Siri can be silently put to work against you, thanks to a new hack discovered by French security researchers that lets attackers transmit commands to the digital assistant over radio.

The hack also works on Google Now. It uses the headphone cord as an antenna, converting electromagnetic waves into electrical signals that iOS and Android register as audio coming from the microphone. José Lopes Esteves and Chaouki Kasmi, the two French researchers who discovered the hack, wrote in their paper that attackers could use it to make Siri or Google Now open a malware site in the phone's browser, or send spam and phishing messages to the victim's friends.

“The sky is the limit here,” says Vincent Strubel, the director of their research group at ANSSI, France's national information security agency. “Everything you can do through the voice interface you can do remotely and discreetly through electromagnetic waves.”

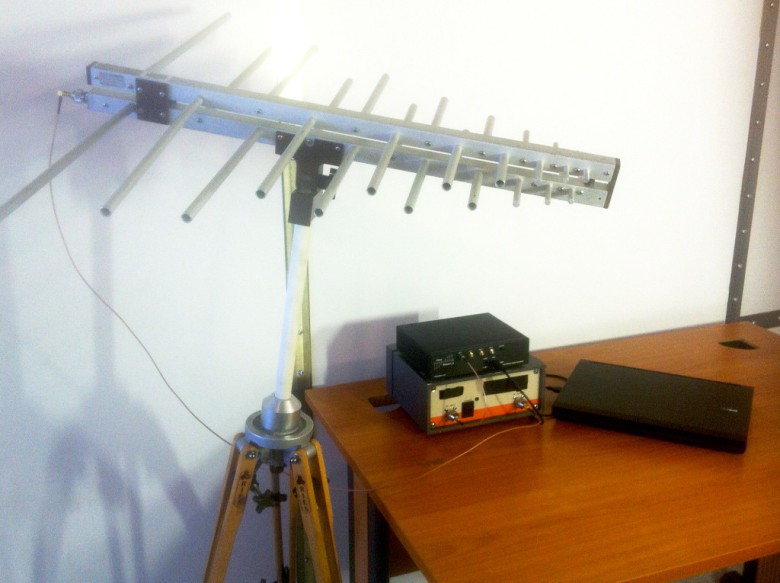

Sounds dangerous right? Well the radio hack has a few key limitations. Mainly, how close an attacker has to get to a victim to broadcast the electromagnetic waves. Also, you need some bulky gear to do it. Here’s a pic of the tools needed for the job:

The hack uses a laptop running GNU Radio, a USRP software-defined radio, an amplifier, and an antenna to generate the electromagnetic waves. The researchers say they could stuff it all into a backpack and get a range of about 6.5 feet. A more powerful version using large batteries could fit in a van and extend the range to a whopping 16 feet. Not exactly discreet.

Pulling off the hack would also require that the victim's microphone-enabled headphones be plugged in. Siri has been around since the iPhone 4S, but a lot of Android phones still don’t have Google Now. The attack also requires victims to be completely inattentive and unable to see that Siri is being turned into a zombie.

Even though it’s highly unlikely that hackers would use the French researchers’ method, because there are better alternatives that don’t require an attacker to be within a few feet of the target, it’s still an impressively clever hack. The researchers notified both Google and Apple of the vulnerability and recommend adding better shielding to headphone cords or letting users create their own custom wake words for Siri and Google Now.