Google’s amazing neural network tells you where any photo was taken

Google’s neural networks continue to amaze. A new deep-learning system called PlaNet, trained on 126 million images and their accompanying EXIF location data, has picked up a “superhuman” ability to work out where a photo was taken.

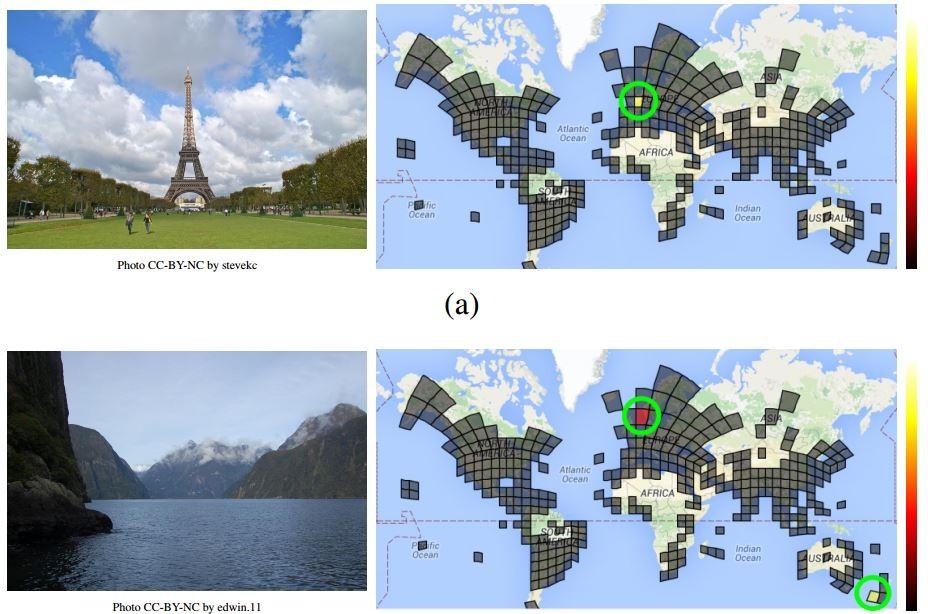

A team led by Tobias Weyand, a computer vision specialist at Google, created PlaNet by taking all of those images and using them to divide the world into a grid of over 26,000 squares. The size of those squares varies depending on how many images are associated with each location.

For instance, big cities like New York City, where lots of photos are taken every day, have “a more fine-grained grid structure than more remote regions where photographs are less common,” explains MIT Technology Review. Google has ignored oceans and the polar regions.
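To make the idea of a variable-size grid concrete, here is a minimal sketch of adaptive subdivision. It is not the team's code: it assumes a plain latitude/longitude quadtree, and the names `Cell`, `subdivide`, `build_grid`, `max_photos` and `min_photos` are all hypothetical. Crowded cells keep splitting into four, and cells with too few photos (think oceans and polar regions) are dropped.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    """A latitude/longitude rectangle and the photos that fall inside it."""
    south: float
    west: float
    north: float
    east: float
    photos: list = field(default_factory=list)  # (lat, lon) pairs


def subdivide(cell, max_photos, min_photos, max_depth=20, depth=0):
    """Split crowded cells into quadrants; drop cells with too few photos.

    max_photos, min_photos and max_depth are illustrative knobs,
    not values reported for PlaNet.
    """
    if len(cell.photos) < min_photos:
        return []  # sparse region: no grid cell is kept here
    if len(cell.photos) <= max_photos or depth >= max_depth:
        return [cell]  # small enough: keep as a leaf of the grid

    mid_lat = (cell.south + cell.north) / 2
    mid_lon = (cell.west + cell.east) / 2
    # Quadrant order: south-west, south-east, north-west, north-east.
    quadrants = [
        Cell(cell.south, cell.west, mid_lat, mid_lon),
        Cell(cell.south, mid_lon, mid_lat, cell.east),
        Cell(mid_lat, cell.west, cell.north, mid_lon),
        Cell(mid_lat, mid_lon, cell.north, cell.east),
    ]
    for lat, lon in cell.photos:
        index = (2 if lat >= mid_lat else 0) + (1 if lon >= mid_lon else 0)
        quadrants[index].photos.append((lat, lon))

    leaves = []
    for quadrant in quadrants:
        leaves.extend(
            subdivide(quadrant, max_photos, min_photos, max_depth, depth + 1)
        )
    return leaves


def build_grid(photos, max_photos, min_photos):
    """Start from one cell covering the whole world and refine it."""
    world = Cell(-90.0, -180.0, 90.0, 180.0, list(photos))
    return subdivide(world, max_photos, min_photos)
```

With a cluster of photos around one city and a handful elsewhere, `build_grid` returns small cells over the city and one large cell over the sparse area, which mirrors the fine-grained versus coarse structure described above.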

Using 91 million of the images, the team was able to teach PlaNet to work out the grid location using only the image itself. The result is a machine that can pinpoint the location or the likely candidates when it is fed a photo.
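Picking a grid cell is a classification problem, and one common way to get "likely candidates" out of a classifier is to turn its raw scores into probabilities and keep the top few. The sketch below illustrates only that last step. The scores and cell centers are made up, and `softmax` and `top_candidates` are hypothetical helper names, not part of PlaNet.

```python
import math


def softmax(scores):
    """Convert raw per-cell scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_candidates(scores, cell_centers, k=3):
    """Return the k most probable cells as (probability, center) pairs."""
    probs = softmax(scores)
    ranked = sorted(
        zip(probs, cell_centers), key=lambda pair: pair[0], reverse=True
    )
    return ranked[:k]


# Made-up scores for three hypothetical cells.
scores = [2.0, 1.0, 0.1]
centers = [(40.7, -74.0), (48.9, 2.3), (35.7, 139.7)]
for prob, center in top_candidates(scores, centers, k=2):
    print(f"{center}: {prob:.2f}")
```

Returning a ranked list rather than a single answer lets the system hedge when a photo could plausibly come from several places.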

The team tested PlaNet using 2.3 million geotagged images from Flickr. It was able to locate 3.6 percent of them with “street-level accuracy,” Weyand says, and another 10.1 percent at city-level accuracy. PlaNet could determine the country of origin of 28.4 percent of the photos, and the continent of 48 percent.

PlaNet was then put to the test against humans, who can locate images using all kinds of cues, including street signs, architectural styles, and even the type of vegetation.

“Weyand and co put PlaNet through its paces in a test against 10 well-traveled humans,” adds MIT. “For the test, they used an online game that presents a player with a random view taken from Google Street View and asks him or her to pinpoint its location on a map of the world.”

PlaNet was able to beat the human players by winning 28 of the 50 rounds with a median localization error of 1131.7 km, while the median human localization error was 2320.75 km.
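A "median localization error" is the middle value of the distances between each guess and the true location. Here is a minimal sketch of how such a figure could be computed, assuming great-circle (haversine) distance on a spherical Earth with a 6371 km radius. The function names are hypothetical, and this is not necessarily the exact distance measure the team used.

```python
import math
from statistics import median

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    # Clamp to 1.0 to guard against tiny floating-point overshoot.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def median_error_km(guesses, truths):
    """Median distance between paired (lat, lon) guesses and true locations."""
    return median(haversine_km(*g, *t) for g, t in zip(guesses, truths))
```

The median is a sensible summary here because one wildly wrong guess, say the wrong continent, would drag a mean upward but barely moves the median.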

“[This] small-scale experiment shows that PlaNet reaches superhuman performance at the task of geolocating Street View scenes,” said Weyand’s team.

“We think PlaNet has an advantage over humans because it has seen many more places than any human can ever visit and has learned subtle cues of different scenes that are even hard for a well-traveled human to distinguish,” they add.

PlaNet can even locate images taken indoors by drawing on other photos from the same album that do carry location cues. What’s most impressive about the system is that it requires just 377 MB of space, which means it would easily fit on your smartphone or tablet.

- Source: MIT Technology Review